Using Access Control Lists To Manage Fine-Grain Data Lake Permissions

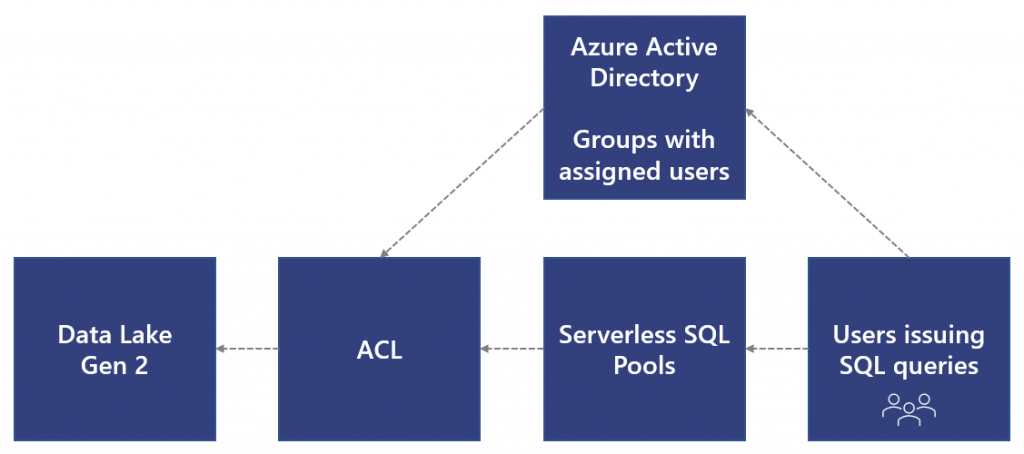

When data is stored in Azure Data Lake Gen2, we can use Azure Active Directory and Access Control Lists (ACLs) to control who can access this data in containers and folders. With Serverless SQL Pools we are able to connect to data in the Data Lake using Azure Active Directory (AAD) credentials and run SQL commands. The user context is passed through to the Data Lake by Serverless SQL Pools and permissions are evaluated by the ACLs assigned to the relevant containers and folders. Using ACLs is called fine-grain access control as we are able to control access at the folder level within the Data Lake.

In this blog post we’ll walk through how to use ACLs to control access to CSV data stored in a Data Lake Gen2 container. We’ll look at a scenario in which different users need different levels of access, set this up in Azure Active Directory and Azure Data Lake Gen2, then query the data using Serverless SQL Pools.

Thanks to Business Intelligence Architect Johannes Vink for investigating fine-grained access control and inspiring this blog post.

Considerations

Microsoft have stated in their best practices document that using Active Directory authentication may result in slower performance. Please be aware of this during testing.

Scenario

In this scenario we are going to be assigning Data Lake permissions to a group of users as follows:

- Senior Data Analyst: This user has access to all the sales data available

- Data Analyst (Europe): This user has access only to Europe sales data

- Data Analyst (North America): This user has access to only North American sales data

We will not be assigning users direct permissions to the Data Lake. Instead, we will add users to Active Directory groups, then assign the groups to the Data Lake. This ensures we can set up permissions on the Data Lake once, then manage access by modifying the users in the groups.

Architecture

The image below shows the architecture in terms of the Azure Services used:

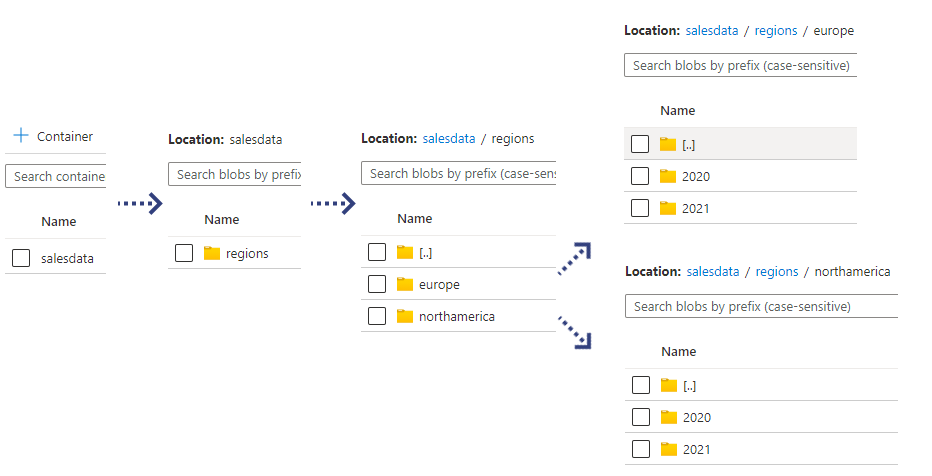

Data Lake Structure

The following container/folder structure will be used in this scenario. Each year folder contains 1 CSV file of sales data, giving a total of 4 CSV files.

- 1 container called salesdata

- Within the container, 1 folder called regions

- Within regions, 2 sub-folders called europe and northamerica

- Within each region sub-folder, 2 sub-folders called 2020 and 2021

- Within each year sub-folder, 1 CSV file

Permission Requirements

The requirements are that the Senior Data Analyst must be assigned read permissions to the regions folder and all sub-folders, whilst each Data Analyst must be assigned read permissions only to their sales region. All Data Analysts must be able to read from all year sub-folders in their region, including new year folders as they are added.

Alternatives

In this scenario as we are providing access to the underlying Data Lake Gen2 containers/folders, a user has permissions to access the relevant containers/folders outside of Serverless SQL Pools. This may not be suitable for certain environments/infrastructure/security considerations. Microsoft Program Manager Jovan Popovic has written about other methods:

- How to implement row-level security in serverless SQL pools

- Securing access to ADLS files using Synapse SQL permission model

Role-Based Access Control vs Access Control Lists

Role-Based Access Control (RBAC) allows a security principal (a user, group, application) to be added to a specific Azure role such as Storage Blob Data Reader which can then allow that security principal to access the data. However, this is called coarse-grain access control as although permissions can be assigned to storage accounts and containers, it does not allow control over specific folders. If we were to apply RBAC permissions to the 3 data analyst roles, all 3 users could see all the data within the salesdata container.

However, ACLs can be assigned at the folder and file level, providing fine-grain access control over data within the Data Lake. It’s important to note that ACLs cannot restrict permissions granted through RBAC: role assignments are always evaluated first, so if an RBAC role grants access, the ACLs are not checked.
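The evaluation order described above can be sketched as a small Python model. This is a simplified illustration rather than the actual Azure implementation: it ignores owner, mask, and other entries, the function name can_read and the file name sales.csv are hypothetical, and the permission strings simply follow the r/w/x notation used by ACLs.

```python
def can_read(path, principal, acls, rbac_readers=frozenset()):
    """Return True if `principal` may read the file at `path`.

    acls maps a path to {principal: "rwx"-style string}; "/" is the container root.
    """
    # Role assignments are checked first; a matching role grants access outright.
    if principal in rbac_readers:
        return True
    parts = path.strip("/").split("/")
    # Execute (x) is needed on the root and on every folder above the file.
    folders = ["/"] + ["/" + "/".join(parts[:i]) for i in range(1, len(parts))]
    for folder in folders:
        if "x" not in acls.get(folder, {}).get(principal, "---"):
            return False
    # Read (r) is needed on the file itself.
    return "r" in acls.get("/" + "/".join(parts), {}).get(principal, "---")


group = "Data Analysts Europe"
acls = {
    "/": {group: "--x"},
    "/regions": {group: "r-x"},
    "/regions/europe": {group: "r-x"},
    "/regions/europe/2020": {group: "r-x"},
    "/regions/europe/2020/sales.csv": {group: "r--"},  # hypothetical file name
}

print(can_read("/regions/europe/2020/sales.csv", group, acls))        # True
print(can_read("/regions/northamerica/2020/sales.csv", group, acls))  # False
# With an RBAC role in place, the ACLs are never consulted.
print(can_read("/regions/northamerica/2020/sales.csv", group, acls,
               rbac_readers={group}))                                  # True
```

The last call shows why the analyst groups in this scenario should not be given a role such as Storage Blob Data Reader: the role would grant access to every region regardless of the ACLs.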

Prerequisites

The following permissions are required for the technical walkthrough.

- Access Azure Active Directory to create groups and assign users

- Access Azure Data Lake Gen2 to create containers/folders and assign ACL permissions

- Access a Serverless SQL Pools database and issue SQL statements using Synapse Analytics Studio, SSMS, or Azure Data Studio

Walkthrough

We’ll now go through the steps required to meet the scenario requirements. This scenario assumes that the user accounts already exist in Azure Active Directory.

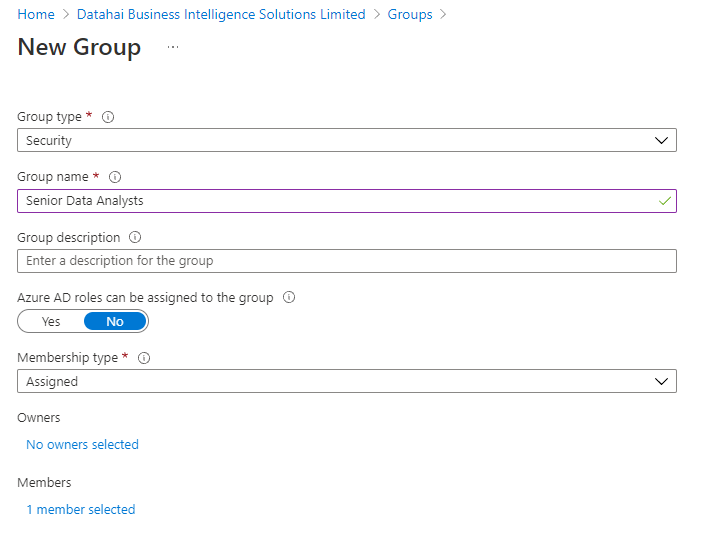

Create Active Directory Groups

We’ll create 3 groups which we’ll use in the Data Lake ACL permissions assignment.

- Login to https://portal.azure.com

- Search for Azure Active Directory and click on the service

- Click on Groups under the Manage area

- Click New Group and enter the following information:

  - Group Type: Security

  - Group Name: Senior Data Analysts

  - Azure AD roles can be assigned to the group: No

  - Membership Type: Assigned

- Click the No members selected link under the Members section and add in a user account. For this scenario we have an existing user called seniordataanalyst

- Click Create

Follow the steps above to create 2 further groups to separate “region” access, with the group names of:

- Data Analysts Europe

- Data Analysts North America

For this scenario we added an appropriate user account to each of the region groups.

Assign ACL Permissions

Once the Active Directory groups are in place and the appropriate users have been added to each group, we can assign those groups to Access Control Lists in Azure Data Lake Gen2. In this section we’ll assign ACL permissions to existing folders using 2 methods: the Azure Portal and PowerShell. Using both highlights that, when folders already exist, permissions must be assigned at each level of the folder hierarchy; adding permissions to a top-level folder does not automatically apply them to its existing child folders. Later in this blog, we’ll use default permissions to ensure any new folders and files are given the appropriate permissions automatically.

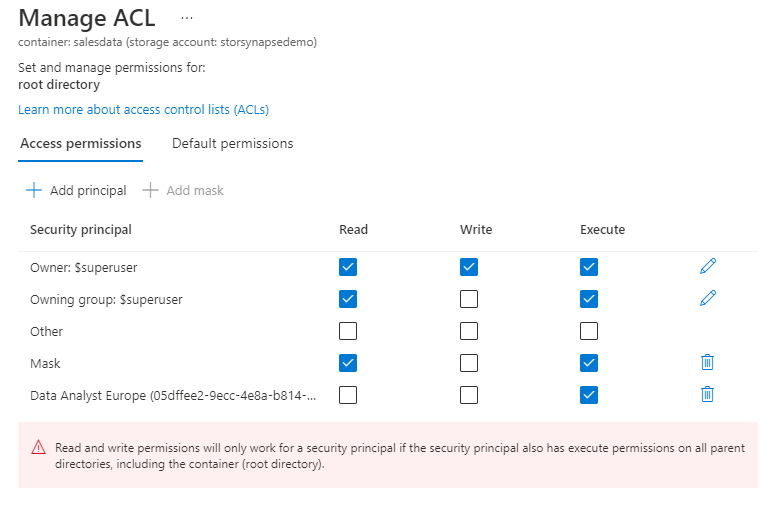

Assign Permissions using Azure Portal

We’ll now assign permissions to the Data Analysts Europe group so that it can read data from the salesdata/regions/europe folder.

- Login to https://portal.azure.com

- Browse to a storage account enabled for Data Lake Gen2 (Hierarchical Namespace enabled). In this scenario we have a storage account called regionalsalesdataacldemo

- Right-click the salesdata container and select Manage ACL

- Click Add principal and search for the group Data Analysts Europe

- Click Select to add the group to the ACL

- In the permissions grid, enable Execute permissions then click Save.

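We must now add Read and Execute permissions to the europe sub-folder and to both the 2020 and 2021 sub-folders. Follow the process described above to add the Data Analysts Europe group to the ACL on each of these sub-folders. Any other folder on the path, such as regions, needs at least Execute permission as well, because permissions on existing folders are not inherited from the container.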

One final step is to add Read permissions on the Sales – Europe – 2020.csv and Sales – Europe – 2021.csv files within the 2020 and 2021 folders.
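As a checklist for the manual steps above, the following Python sketch lists every path that needs an ACL entry before a group can read a single file. It is only an illustration: the helper name required_entries is hypothetical, and the permissions mirror the walkthrough (Execute on the container, Read and Execute on each folder, Read on the file).

```python
def required_entries(file_path):
    """Map each path that needs an ACL entry to the permission to grant."""
    parts = file_path.strip("/").split("/")
    entries = {"/": "--x"}                            # container root: Execute only
    for i in range(1, len(parts)):
        entries["/" + "/".join(parts[:i])] = "r-x"    # each folder: Read + Execute
    entries["/" + "/".join(parts)] = "r--"            # the file itself: Read
    return entries


# Print one line per ACL entry needed for the 2020 Europe file.
for path, permission in required_entries(
        "regions/europe/2020/Sales – Europe – 2020.csv").items():
    print(permission, path)
```

For a file four levels deep this yields five entries per group, which is why the recursive and default ACL options later in this post are worth the effort.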

Test Permissions

Now that we have assigned permissions on the europe data to the Data Analysts Europe group, we can test these permissions using Synapse Studio and Serverless SQL Pools.

Set-up Serverless SQL Pools database

- Login to https://portal.azure.com

- Browse to the Synapse Analytics instance and open Synapse Studio.

- Select the Develop tab, create a new SQL script, and run the following script to set up the new database and create the connection to the storage account. Note that CREATE DATABASE SCOPED CREDENTIAL uses the identity User Identity. This ensures that the Active Directory identity of the user who logs in to Synapse Analytics and runs the SQL query is passed through to the Data Lake storage account, where the ACL permissions are evaluated.

--Create new serverless sql database

CREATE DATABASE sqlacldemo;

--switch to new database

USE sqlacldemo;

--Create Master key in database

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'dfADFADf454325adsfadsf';

--create active directory database scoped credential

CREATE DATABASE SCOPED CREDENTIAL SynapseUserIdentity

WITH IDENTITY = 'User Identity';

--add UTF8

ALTER DATABASE sqlacldemo COLLATE Latin1_General_100_BIN2_UTF8;

--create external data source

CREATE EXTERNAL DATA SOURCE ExternalDataSourceSalesData

WITH (

LOCATION = 'https://regionalsalesdataacldemo.dfs.core.windows.net/salesdata'

);

Query Data Lake Data

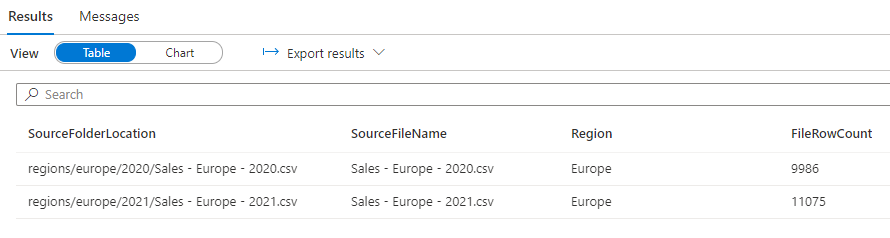

Once the new Serverless SQL Pools database and the connection to the Data Lake are set up, we can run a query as any user who has been added to the Data Analysts Europe group. Run the SQL query below to return the europe data only. Note that the OPENROWSET BULK property is set to regions/**, which means Serverless SQL Pools will scan all sub-folders under the regions folder; the ACL permissions then determine which data is returned.

SELECT fct.filepath() AS SourceFolderLocation,

fct.filename() AS SourceFileName,

fct.Region,

COUNT(*) AS FileRowCount

FROM

OPENROWSET

(

BULK 'regions/**',

DATA_SOURCE = 'ExternalDataSourceSalesData',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|'

) AS fct

GROUP BY fct.filepath(),

fct.filename(),

fct.Region

If permissions have been applied correctly, the results should appear as follows:

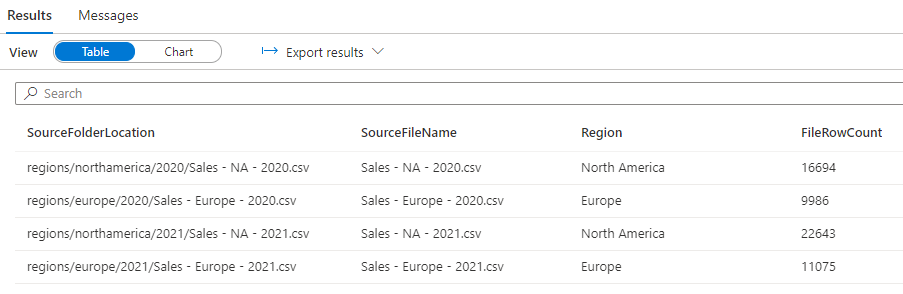

If we were to repeat the steps above but instead assign the appropriate ACLs to the Data Analysts North America group to the regions/northamerica/ folders and sub-folders then the users assigned to the Data Analysts North America group would only be able to read data from those folders.

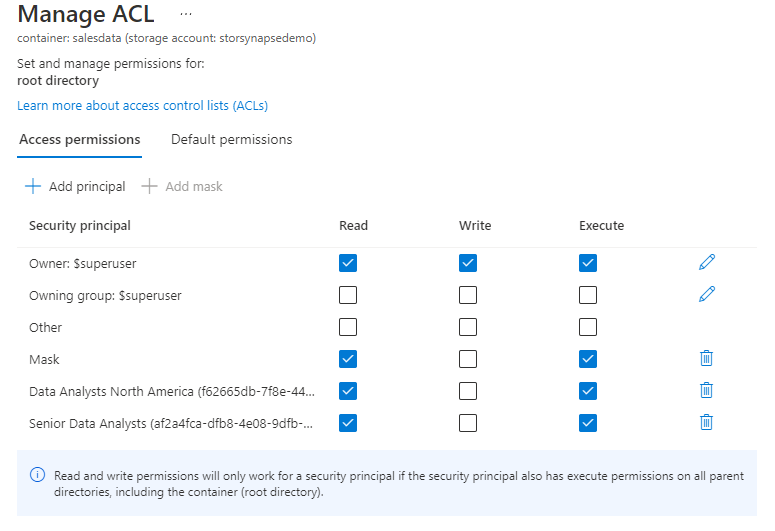

Recursively Add Permissions

Although the process above works, it is a manual process in which every existing folder, sub-folder, and file needs permissions added individually. To reduce the administrative effort, we can assign ACL permissions recursively across the relevant folders using PowerShell (other methods are also available). We will now update the ACL permissions recursively to allow the Senior Data Analysts group to read data from the regions/ folder and all sub-folders.

In the PowerShell code below, we connect to a storage account using Active Directory. Please note that the user running these commands needs to be assigned the role Storage Blob Data Owner on the storage account container. The $groupID is the GUID of the security group, which is visible in the Azure Active Directory service.

Please note that we are running the Update-AzDataLakeGen2AclRecursive PowerShell command, which keeps all existing ACL permissions in place and adds the Senior Data Analysts group to the root of the container and all folders/files.

Connect-AzAccount

Select-AzSubscription -SubscriptionId xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

$ctx = New-AzStorageContext -StorageAccountName 'regionalsalesdataacldemo' -UseConnectedAccount

$filesystemName = "salesdata"

$dirname = "/"

$groupID = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx";

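# The principal is a security group, so the entry type is group rather than user

$acl = Set-AzDataLakeGen2ItemAclObject -AccessControlType group -EntityId $groupID -Permission r-x

# No -Path is given, so the update starts at the root of the container

Update-AzDataLakeGen2AclRecursive -Context $ctx -FileSystem $filesystemName -Acl $acl

Once the permissions have been assigned, the ACL on each folder should show the Senior Data Analysts group with Read and Execute permissions.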

If any user within the Senior Data Analysts group runs a SELECT command in Synapse Studio, they will see the sales data for all regions.
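The merge behaviour of the recursive update, where existing entries are kept and the new group is added, can be modelled in a few lines of Python. This is a sketch of the idea only, not of the cmdlet’s internals; the function name update_acl_recursive and the /other folder are hypothetical.

```python
def update_acl_recursive(tree, root, new_entries):
    """Merge new_entries into the ACL of `root` and every path beneath it.

    tree maps a path to {principal: permission}. Returns the number of paths changed.
    """
    prefix = root.rstrip("/") + "/"
    changed = 0
    for path, acl in tree.items():
        if path == root or path.startswith(prefix):
            acl.update(new_entries)  # entries for other principals are left in place
            changed += 1
    return changed


tree = {
    "/": {"Data Analysts Europe": "--x"},
    "/regions": {"Data Analysts Europe": "r-x"},
    "/regions/europe": {"Data Analysts Europe": "r-x"},
    "/other": {},  # hypothetical folder outside the updated path
}

print(update_acl_recursive(tree, "/regions", {"Senior Data Analysts": "r-x"}))  # 2
print(tree["/regions/europe"])
# {'Data Analysts Europe': 'r-x', 'Senior Data Analysts': 'r-x'}
```

Because the update merges rather than replaces, the Data Analysts Europe entries set earlier through the portal survive the recursive run.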

Assign Default ACL Permissions

The steps above have all assigned permissions to existing folders and files within the Data Lake container. When new folders and files are created, they receive the default permissions set on their parent folder. As before, we can use either the Azure Portal or PowerShell to add or update these default ACLs.

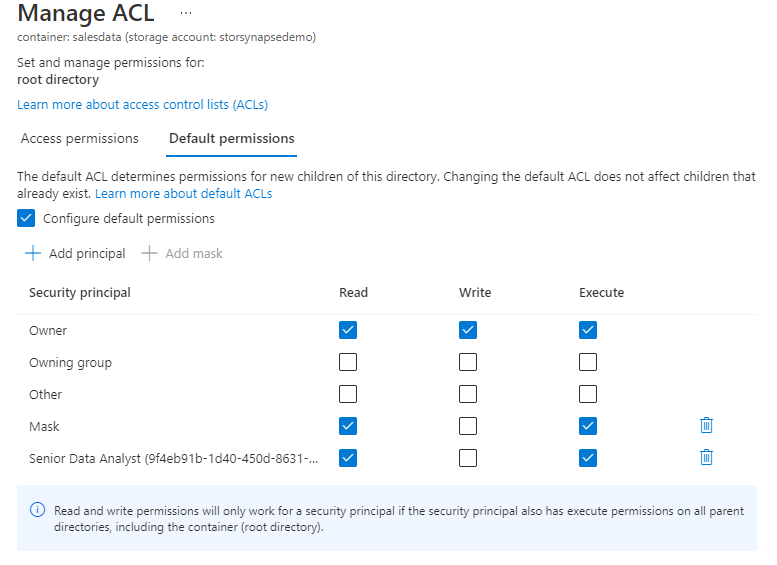

Assign Default Permissions using Azure Portal

- Right-click the salesdata container and select Manage ACL

- Select Default permissions then enable Configure default permissions

- Click Add principal and search for the group Senior Data Analysts

- Click Select to add the group to the ACL

- In the permissions grid, enable Read and Execute permissions then click Save.

This sets the default permissions for any new folders or files created in the salesdata container. To create default permissions for other folders and sub-folders, the process above must be repeated. We can also use PowerShell to set default permissions and apply them recursively. Note that the -DefaultScope parameter is added to the $acl assignment command.

Connect-AzAccount

Select-AzSubscription -SubscriptionId xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

$ctx = New-AzStorageContext -StorageAccountName 'regionalsalesdataacldemo' -UseConnectedAccount

$filesystemName = "salesdata"

$dirname = "/"

$groupID = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx";

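# -DefaultScope creates a default ACL entry; the principal is a security group, so the type is group

$acl = Set-AzDataLakeGen2ItemAclObject -AccessControlType group -EntityId $groupID -Permission r-x -DefaultScope

Update-AzDataLakeGen2AclRecursive -Context $ctx -FileSystem $filesystemName -Acl $acl

Once the default permissions have been applied recursively, each folder and sub-folder should now carry a default ACL entry granting the group Read and Execute permissions.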

The PowerShell command can also set the default permissions for the Data Analysts Europe and Data Analysts North America groups by using the appropriate group ID and the folder level.
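To make the effect of default permissions concrete, here is a small Python model of how a new folder or file picks up its parent’s default ACL. It is a simplification under stated assumptions: it ignores the umask and mask entries, and the names create_child, 2022, and sales.csv are hypothetical.

```python
def create_child(tree, parent, name, is_folder):
    """Create a child of `parent` and apply the parent's default ACL to it.

    tree maps a path to {"access": {...}, "default": {...}}.
    """
    parent_default = tree[parent]["default"]
    child_path = parent.rstrip("/") + "/" + name
    tree[child_path] = {
        # The parent's default ACL becomes the child's access ACL.
        "access": dict(parent_default),
        # Folders also keep a copy as their own default, so it keeps propagating.
        "default": dict(parent_default) if is_folder else {},
    }
    return child_path


tree = {"/regions/europe": {"access": {"Data Analysts Europe": "r-x"},
                            "default": {"Data Analysts Europe": "r-x"}}}

year = create_child(tree, "/regions/europe", "2022", is_folder=True)
csv_file = create_child(tree, year, "sales.csv", is_folder=False)
print(tree[csv_file]["access"])  # {'Data Analysts Europe': 'r-x'}
```

In this model a new year folder and the file inside it are readable by the region group without any further assignment, which is the behaviour the permission requirements call for when new year folders are added.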

Conclusion

In this blog post we have looked at how to use Access Control Lists to control access to data in an Azure Data Lake Gen2 storage account. We have then seen how Synapse Analytics Serverless SQL Pools can pass the Active Directory user through to the storage account so that permissions are evaluated at the container and folder level.

References

- https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-access-control

- https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-access-control-model

- https://docs.microsoft.com/en-us/sql/t-sql/statements/execute-as-transact-sql?view=sql-server-ver15

- https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-acl-powershell