Exploring Fabric OneLake vs Azure Data Lake Gen2 Storage Accounts

Overview

Recently I’ve been reading through Microsoft’s documentation on implementing medallion lakehouse architecture in Microsoft Fabric (doc is here) and thinking about how to structure the workspaces & lakehouses to deliver that methodology. It’s a great article and well worth reading. The one thing that stuck in my mind, though, is OneLake. You’ll frequently see this marketing message from Microsoft:

OneLake is a single, unified, logical data lake for your whole organization.

Microsoft

What does this actually mean, and does “Data Lake” sound familiar? Yes, there is an existing Azure service called Azure Data Lake Storage Gen2 (ADLS Gen2). So is OneLake a completely different technology to ADLS Gen2? Put simply, no. OneLake is a SaaS (Software-as-a-Service) version of ADLS Gen2, which is itself a PaaS (Platform-as-a-Service) offering. OneLake supports the ADLS Gen2 APIs, so tools like Azure Storage Explorer will work with it. I like to think of OneLake as an abstraction over ADLS Gen2 rather than something fundamentally different. Having said that, Microsoft is putting a whole bunch of new features into OneLake via Fabric, so the two services will differ in what you can and can’t do.

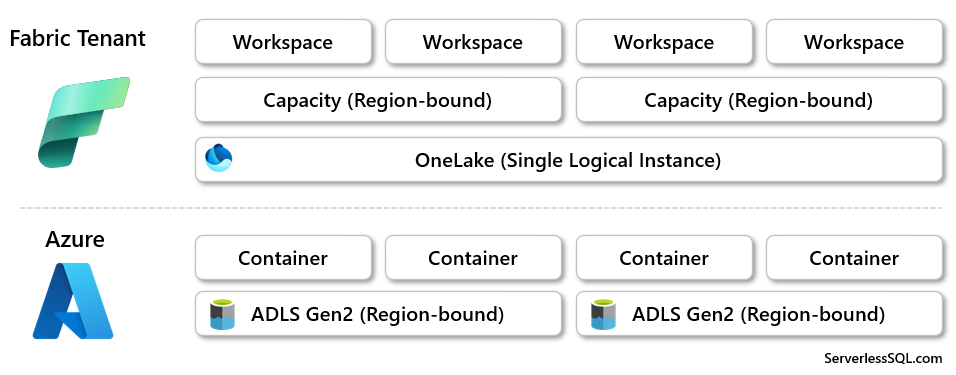

You get a single OneLake per Fabric tenant (the name kinda suggests that…). This concept of single storage across a Fabric tenancy interests me because, in previous data lake architectures, multiple ADLS Gen2 storage accounts could be created, each handling a particular workload (or zone, in medallion architecture terms). How does this work in Fabric? The documentation above tells us that we can create multiple workspaces, but where does the data sit? Do we have to worry about region-specific data? Let’s take a look.

Workspaces Are Data Lake Containers

This is the first concept that I think is important to understand. When creating a Fabric Workspace, you are creating a Container within OneLake. It’s exactly like creating a Container in an ADLS Gen2 storage account. The difference is that in ADLS Gen2 we could create multiple storage accounts and create multiple containers within those accounts. In a Fabric tenancy there is only a single OneLake instance, which is accessed using a single URL. To access a specific workspace (container), the workspace name (or GUID) is appended to the OneLake URL.

For example, we can use a tool like Azure Storage Explorer to access a Workspace by using the URL below (Fabric_OneLake_Test being a workspace I created in Fabric).

- https://onelake.dfs.fabric.microsoft.com/Fabric_OneLake_Test

One thing to note here is that this is the global URL. To ensure your data never leaves its region when it is queried from a different region, use the region-specific URL based on the region the Fabric Capacity is located in, e.g. https://uksouth-onelake.dfs.fabric.microsoft.com.
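The two URL forms above can be sketched as a small helper. This is only an illustration: the function name and the `region` parameter are my own, and the region prefix format is assumed to follow the uksouth example shown above.

```python
from typing import Optional

ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_workspace_url(workspace: str, region: Optional[str] = None) -> str:
    """Build the OneLake URL for a workspace name or GUID.

    With no region the global endpoint is used; with a region such as
    "uksouth" the region-specific endpoint is used instead.
    """
    if " " in workspace:
        # Names containing spaces need the workspace GUID instead.
        raise ValueError("Workspace name contains spaces; use the workspace GUID")
    host = f"{region}-{ONELAKE_HOST}" if region else ONELAKE_HOST
    return f"https://{host}/{workspace}"


print(onelake_workspace_url("Fabric_OneLake_Test"))
print(onelake_workspace_url("Fabric_OneLake_Test", region="uksouth"))
```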

Region-Bound

What about regions? When you create an ADLS Gen2 account in Azure, you state which region that storage account is to be created in (e.g. UK South, East US etc.). This allows you to ensure that any data with specific compliance requirements is stored in the relevant region. In Fabric, we can’t tell a Workspace which region to be created in, but we can allocate that workspace to a Fabric Capacity in a specific region, because it’s the Fabric Capacities that are created in different regions. OneLake is a logical concept rather than a region-bound construct. It allows you to see your data as one whole, rather than as a series of disparate storage accounts.

I’ve attempted to explain this in the following image, which shows how I understand OneLake to be structured compared to ADLS Gen2 accounts. In OneLake we could have data stored in different regions if there are Workspaces allocated to Fabric Capacities which are provisioned in different regions.

Fabric Capacities

When creating a Fabric Capacity, regardless of whether it’s via Azure or through Premium licensing, that capacity will be allocated to a specific region. In the image below I have created 3 Fabric Capacities in 3 different regions. I can allocate a Fabric Workspace to any of those capacities and the data within that workspace will be region-bound to that capacity’s region.

Scenarios

Let’s look at a handful of scenarios covering allocating Fabric Workspaces to capacities.

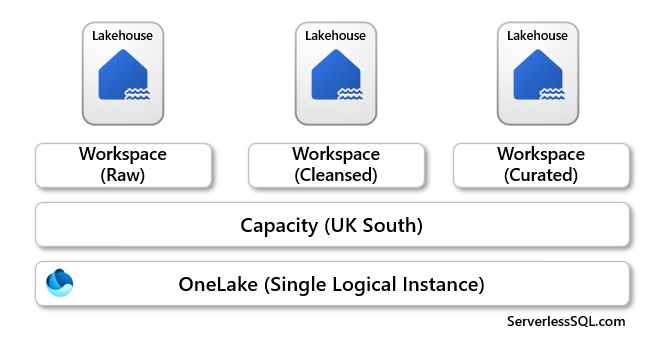

Create Lakehouses in Workspaces Assigned to Fabric Capacity in Same Region

In this scenario, 3 Workspaces are allocated to a single Fabric Capacity that is based in the UK South region. All the data (Files and Tables) created within the Lakehouses in those Workspaces will reside in the UK South region.

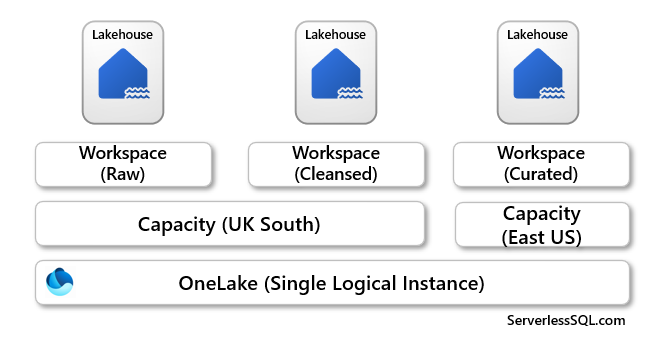

Create Lakehouses in Workspaces Assigned to Fabric Capacities in Different Regions

Now let’s look at a scenario in which we have the same 3 Workspaces with a Lakehouse in each, except that an additional Fabric Capacity has been created in the East US region. If we allocate the Workspace that contains the Curated Lakehouse to this new capacity then, even though it’s in the same Fabric tenant, we will be moving data across regions whenever we load the Curated Lakehouse from the Cleansed Lakehouse. That’s not ideal, as it may breach data protection requirements, and there could also be egress fees across regions (the Microsoft Fabric pricing page on Microsoft Azure doesn’t show any networking cost information yet). Thanks to Sandeep Pawar for raising the possibility of egress charges.

If moving the data between regions is compliant, it may prove useful to have the data situated close to where it’s being used.
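To make the scenario concrete, here is a minimal sketch that models capacities and workspaces and flags a load that would cross regions. The class names, capacity names and the "Raw" workspace are hypothetical; this is just a way of reasoning about the layout, not a Fabric API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capacity:
    name: str
    region: str


@dataclass(frozen=True)
class Workspace:
    name: str
    capacity: Capacity  # the workspace's data is bound to this capacity's region


def crosses_region(source: Workspace, target: Workspace) -> bool:
    """True if loading target from source would move data between regions."""
    return source.capacity.region != target.capacity.region


uk = Capacity("uk-capacity", "UK South")
us = Capacity("us-capacity", "East US")

raw = Workspace("Raw", uk)
cleansed = Workspace("Cleansed", uk)
curated = Workspace("Curated", us)  # reallocated to the East US capacity

for src, dst in [(raw, cleansed), (cleansed, curated)]:
    flag = "CROSS-REGION" if crosses_region(src, dst) else "same region"
    print(f"{src.name} -> {dst.name}: {flag}")
```

A check like this could sit in a deployment review to catch a workspace being moved to a capacity in a region where the data shouldn’t go.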

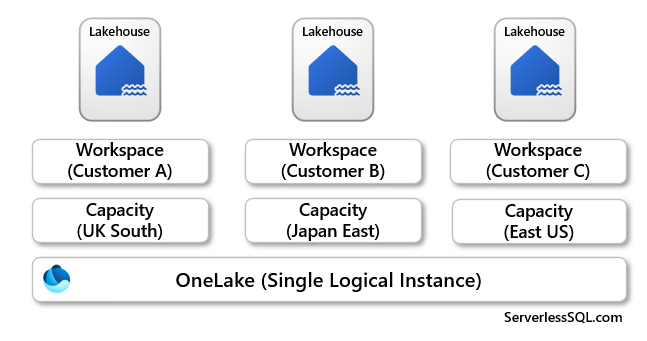

Deliver Capabilities to Customers using Workspaces Assigned to Fabric Capacities in Different Regions

What about delivering global solutions to customers (or global organisations) in the same tenancy? Well, as we can assign Workspaces to Fabric Capacities in different regions, we can do this by creating capacities in the relevant regions.

Can You Use Fabric Workspaces Like ADLS Gen2 Containers?

If Fabric Workspaces are like Containers in ADLS Gen2, then should we be able to just use them as such? Well, not really. Workspaces are a managed resource, which stops you from using one like a plain container. E.g. if you create a Workspace and access it using a URL, you won’t be able to just start creating folders and files in the root. Fabric restricts ad hoc access to the underlying container; you must create a Fabric item to work with data.

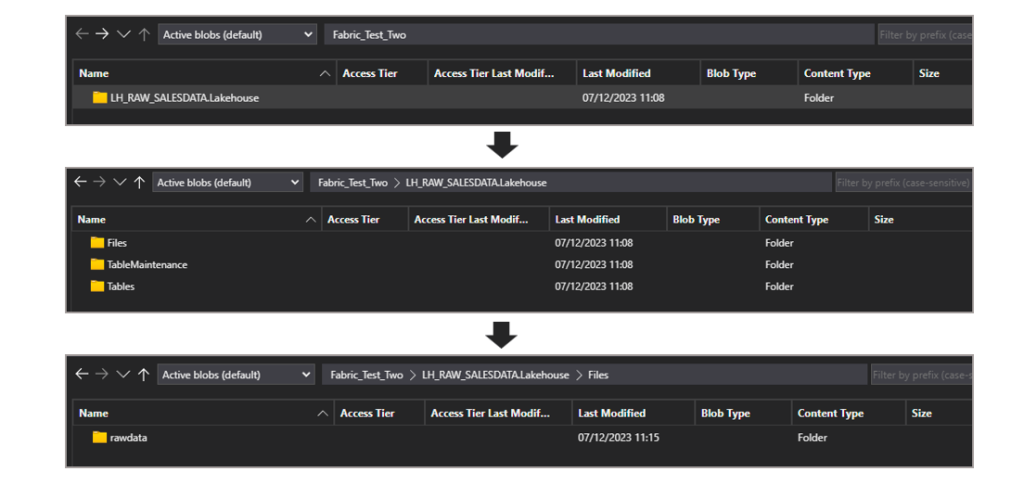

What we can do is create a Lakehouse in the workspace, which will appear as a folder in the workspace’s OneLake structure. It contains 2 folders for working with data: Tables and Files. We can’t modify these 2 folders themselves, as they are managed by Fabric, but we can work with folders and files beneath them. In the Files folder we can create sub-folders and start working with files. E.g. if I create a folder and upload a file using Azure Storage Explorer, then switch to the Fabric UI, I will see the new folder and file appear.
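The rule described above (nothing at the workspace root, nothing directly replacing Tables or Files, but anything beneath them) can be sketched as a path helper. The function name is hypothetical, and the `.Lakehouse` item suffix in the path is an assumption based on how OneLake names item folders; it isn’t something shown earlier in this post.

```python
from pathlib import PurePosixPath

# The two Fabric-managed folders inside a Lakehouse item.
MANAGED_ROOTS = ("Files", "Tables")


def lakehouse_path(lakehouse: str, relative_path: str) -> str:
    """Return the path of a folder or file inside a Lakehouse, relative to the workspace.

    Only paths beneath Files/ or Tables/ are accepted, mirroring the
    restriction that the managed folders themselves cannot be modified.
    """
    parts = PurePosixPath(relative_path).parts
    if len(parts) < 2 or parts[0] not in MANAGED_ROOTS:
        raise ValueError(
            f"Path must sit beneath one of {MANAGED_ROOTS}: {relative_path!r}"
        )
    return f"{lakehouse}.Lakehouse/{'/'.join(parts)}"


print(lakehouse_path("MyLakehouse", "Files/landing/sales.csv"))
```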

Workspace Creation and File Upload Walkthrough

Let’s work through an example now. The prerequisites are:

- Access to a Fabric/Power BI tenancy

- Ability to create a Workspace in a Power BI/Fabric tenancy

- Permissions to allocate the Workspace to a Fabric capacity

- Permissions to create Fabric items

Create Workspace and Assign to Capacity

- Log in to https://app.powerbi.com (or https://app.fabric.microsoft.com)

- Create a new Workspace (for this example don’t put spaces in the Workspace name)

- Allocate this Workspace to a Fabric Capacity (e.g. Trial)

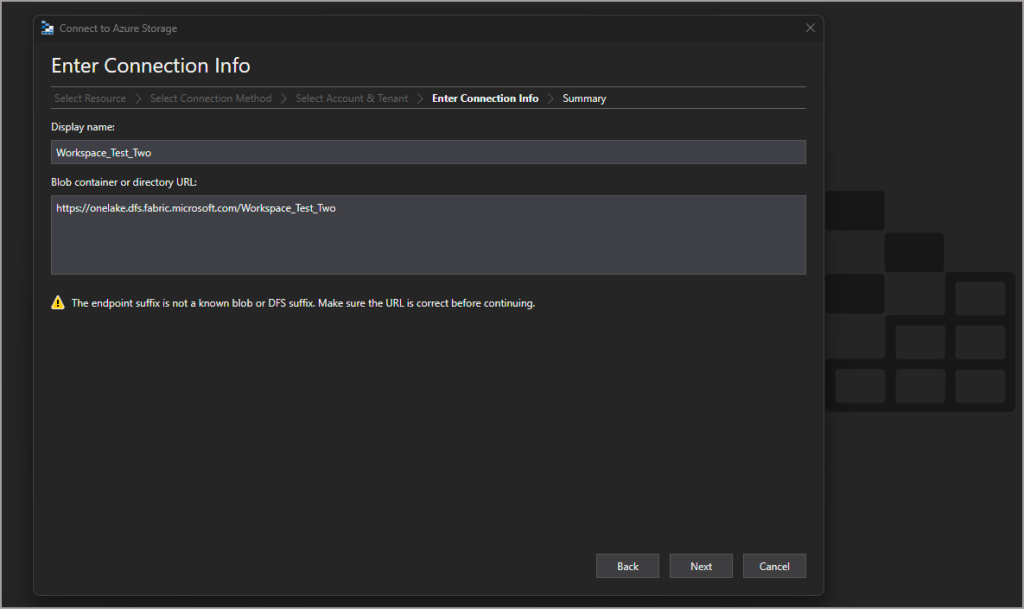

Connect to Workspace using Azure Storage Explorer

- Open Azure Storage Explorer (or download and install from here)

- Open the Connect option (plug icon on the left menu) and select ADLS Gen2 container or directory

- Connect using an appropriate authentication mechanism e.g. OAuth

- In the Blob container or directory URL: text box, enter the URL of the workspace (let’s use the global URL). If there are spaces in the Workspace name then the GUID needs to be used instead (see appendix)

- https://onelake.dfs.fabric.microsoft.com/Workspace_Name

- Click Next then Connect

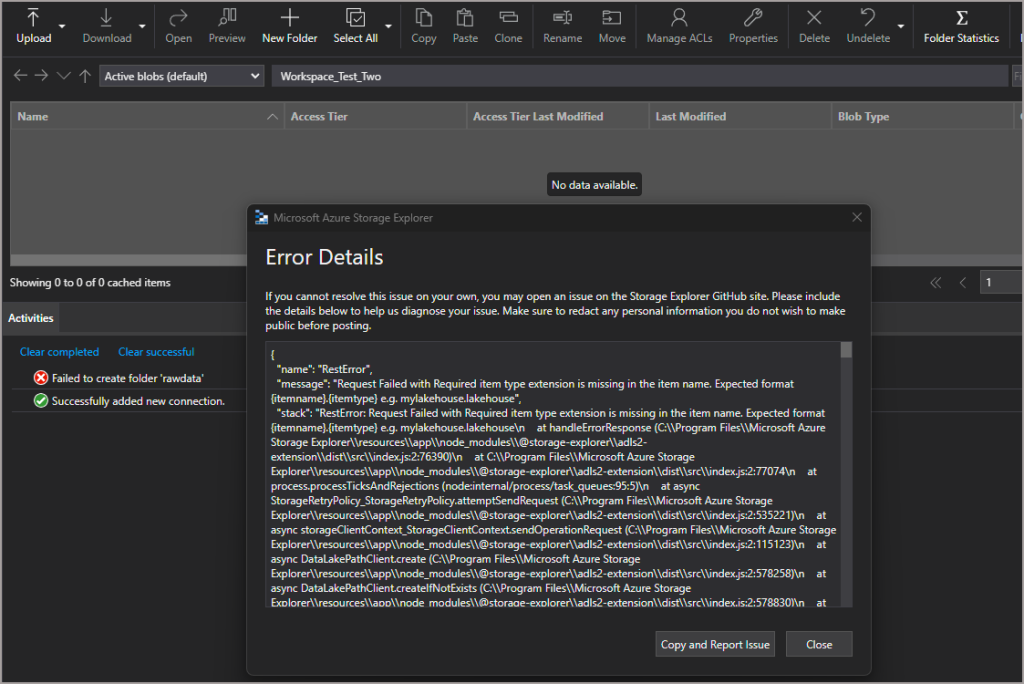

Let’s try and create a folder in the root of the Workspace. We’ll get an error stating we need an item extension like a lakehouse.

Create Lakehouse and Create Folder

Let’s create a new Lakehouse in the Workspace and try creating a folder again.

- Go back to the Workspace, switch to the Data Engineering experience (menu bottom left)

- Click New > Lakehouse and give the lakehouse a name

- Switch back to Azure Storage Explorer and refresh the connection. You should now see the Lakehouse item and can browse down through the folder structure to the Files area

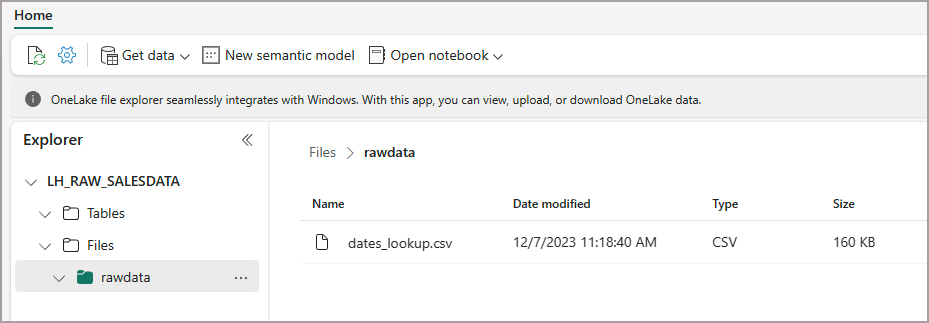

- We can create a new folder and upload a file to this folder

If we go back to the Fabric UI and refresh the Files area then we can see the new folder and any files uploaded. We do have control over some areas of the Workspace as if it were an ADLS Gen2 container; however, we must work within a Fabric item, such as beneath a Lakehouse’s Tables or Files folders.
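Because OneLake supports the ADLS Gen2 APIs, the same folder creation and upload should also be possible from code. Below is a rough sketch using the azure-storage-file-datalake and azure-identity Python packages. I haven’t covered this route in the walkthrough, so treat it as an assumption-laden example: the function names, the `.Lakehouse` path suffix and the choice of DefaultAzureCredential are mine, and the workspace, lakehouse, folder and file names are placeholders.

```python
import os

ONELAKE_URL = "https://onelake.dfs.fabric.microsoft.com"


def files_directory(lakehouse: str, folder: str) -> str:
    """Path of a folder beneath the Lakehouse Files area (item suffix is assumed)."""
    return f"{lakehouse}.Lakehouse/Files/{folder}"


def upload_to_lakehouse(workspace: str, lakehouse: str, folder: str, local_file: str) -> str:
    """Create a folder under Files and upload a local file into it."""
    # Imported here so the helper above can be used without the Azure SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.storage.filedatalake import DataLakeServiceClient

    service = DataLakeServiceClient(ONELAKE_URL, credential=DefaultAzureCredential())
    # The workspace plays the role of the ADLS Gen2 container (file system).
    file_system = service.get_file_system_client(file_system=workspace)
    directory = file_system.get_directory_client(files_directory(lakehouse, folder))
    directory.create_directory()  # mirrors the manual "new folder" step

    file_client = directory.get_file_client(os.path.basename(local_file))
    with open(local_file, "rb") as data:
        file_client.upload_data(data, overwrite=True)
    return f"{directory.path_name}/{os.path.basename(local_file)}"


# Example call (placeholder names, requires a signed-in Azure identity):
# upload_to_lakehouse("Workspace_Name", "MyLakehouse", "landing", "sales.csv")
```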

Appendix

To use the GUID instead of the Workspace name when connecting, you can obtain this from the URL when you are accessing the workspace in the browser:

- https://app.powerbi.com/groups/01f29c49-add1-4a5c-b412-b9e7e16dc031/list?experience=power-bi
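If you’d rather not pick the GUID out by eye, a small regular expression can pull it from the browser URL. This is a minimal sketch; the function name is my own and it assumes the GUID follows `/groups/` as in the URL above.

```python
import re
from typing import Optional

# A GUID in 8-4-4-4-12 hex form, immediately after "/groups/".
_WORKSPACE_GUID = re.compile(
    r"/groups/([0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12})"
)


def workspace_guid(browser_url: str) -> Optional[str]:
    """Return the workspace GUID from a workspace URL, or None if absent."""
    match = _WORKSPACE_GUID.search(browser_url)
    return match.group(1).lower() if match else None


url = "https://app.powerbi.com/groups/01f29c49-add1-4a5c-b412-b9e7e16dc031/list?experience=power-bi"
print(workspace_guid(url))
```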
