Elastic Queries with Azure SQL Database and Synapse Analytics Serverless SQL Pools

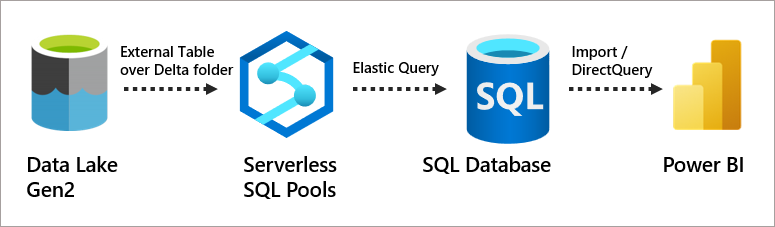

Recently I have been exploring an option to use an Azure SQL Database for a small footprint Data Warehouse and wondered whether I could create an elastic query connection to a Serverless SQL Pools database and query a Delta folder in an Azure Data Lake Gen2. It can be done successfully using an External Table in a Serverless SQL Pools database and creating the same table in the Azure SQL Database. Now the data within the Azure SQL Database can be queried alongside the data within the Data Lake using the Serverless SQL Pools engine.

The goal here is to query the internal data and external data via a single surface area – the Azure SQL Database

In this blog scenario we’ll use an example database, there is an Azure SQL Database that contains AdventureWorks Dimension and Fact tables with less than 10GB of data overall (not enough data for a Dedicated SQL Pool!). There is also an Azure Data Lake Gen2 account that contains around 1.2 billion web telemetry events about users browsing/purchasing behaviour on the (fictious) website. This data is stored in Delta format (Parquet files). The goal here is to query this data via a single surface area without moving the web telemetry data and use the Serverless SQL Pools engine to do the “heavy lifting” when querying data in the data lake.

The SQL queries are on GitHub here.

What is Elastic Query?

Elastic Query allows querying remote tables on multiple databases using T-SQL commands. A connection can be made from an Azure SQL database to another Azure SQL Database and T-SQL queries can then be run across the databases. You can read more here.

Elastic Query functionality is included in the cost of the Azure SQL Database and is supported on any tier.

Basic Solution

Here in its most simple form is the architecture, where Power BI (or any SQL query tool) connects to the Azure SQL Database only, this SQL Database holds imported data and also the external tables available in the Serverless SQL Pools database. Serverless SQL Pools itself connects to the underlying data, in Delta format, in Azure Data Lake Gen2.

Solution Steps

The following steps cover the walkthrough, we need to create objects in the Serverless SQL Pools service first, then switch to an Azure SQL Database to complete the Elastic Query setup.

- Serverless SQL Pools

- Create a Serverless SQL Pools database

- Create an External Table over Delta data in Azure Data Lake Gen2

- Create SQL Login and User in Serverless SQL Pools

- Grant SELECT on External Table to SQL User

- Grant REFERENCE on database scoped credential used in External Table to SQL User

- Azure SQL Database

- Create Master Key

- Create a Database Scoped Credential using the SQL User created in Serverless

- Create External Data Source (URL of Serverless, Credential)

- Create External Table in SQL Database

Solution Walkthough

We’ll now step through the process to allow Azure SQL Database to send queries to Serverless SQL Pools and retrieve the results. This walkthrough assumes you have an Azure Synapse Analytics workspace already setup, here is a Getting Started with Serverless SQL Pools article which includes setting up a new Synapse workspace. You will also require an Azure SQL Database. In this scenario, the Synapse workspace and SQL Database are all co-located in the same region.

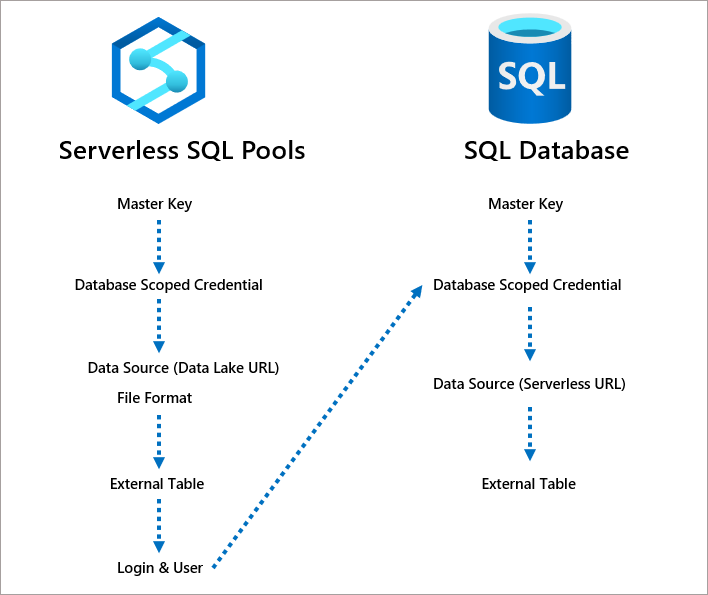

Object Dependencies

The following is a SQL object dependency diagram showing the relationship between each object created.

Serverless SQL Pools Setup

Let’s create a new Serverless SQL Pools database, this step includes creating a data source to the Azure Data Lake Gen2 account, the associated Managed Identity security to allow Serverless SQL Pools to access the data, and creating the External Table over the Delta data.

Create SQL Objects

The following T-SQL can be run in Synapse Studio on the inbuilt Serverless SQL Pools service.

--create login

USE MASTER

CREATE LOGIN elasticuser WITH PASSWORD = '<strong_password>'

--create new database

CREATE DATABASE SQLElasticQuery;

GO

USE SQLElasticQuery;

GO

--create objects for connecting to data lake

--Create a schema to hold our objects

CREATE SCHEMA LDW authorization dbo;

--encryption to allow authentication

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong_password>';

--Create a credential using Managed Identity

CREATE DATABASE SCOPED CREDENTIAL DataLakeManagedIdentity

WITH IDENTITY='Managed Identity'

--Create a data source for use in queries

CREATE EXTERNAL DATA SOURCE ExternalDataSourceDataLakeUKMI

WITH (

LOCATION = 'https://<storageaccount>.dfs.core.windows.net/<container>',

CREDENTIAL = DataLakeManagedIdentity

);

--Create Delta file format

CREATE EXTERNAL FILE FORMAT SynapseDeltaFormat

WITH (

FORMAT_TYPE = DELTA

);

--enable support for UTF8

ALTER DATABASE SQLDatabash COLLATE Latin1_General_100_BIN2_UTF8;

Create External Table over Delta

We can now create an External Table over the Delta data in the Data Lake using the Data Source and File Format created in the previous step. The Location specifies the root folder of the Delta data.

--create external table over Delta

CREATE EXTERNAL TABLE LDW.WebTelemetryDelta

(

UserID varchar(20),

EventType varchar(100),

ProductID varchar(100),

URL varchar(100),

Device varchar(50),

SessionViewSeconds int,

MessageKey varchar(100),

EventYear int,

EventMonth int,

EventDate date

)

WITH (

LOCATION = 'cleansed/webtelemetrydeltakey',

DATA_SOURCE = ExternalDataSourceDataLakeUKMI,

FILE_FORMAT = SynapseDeltaFormat

)

GO

Create SQL Objects required for Elastic Query

Once the External Table is created, we can now create a User in the database and grant SELECT permissions on the table. We also need to provide access to the Managed Identify credential that Serverless SQL Pools uses to connect to the data lake account (I have an ongoing discussion with Microsoft about the process of securing External Tables…I’ll keep you posted).

--switch to the Serverless databas USE SQLElasticQuery; GO --create user from login CREATE USER elasticuser FROM LOGIN elasticuser; --grant select on the Delta table GRANT SELECT ON LDW.WebTelemetryDelta to elasticuse --grant the sql user access to the credential to access the data lake GRANT REFERENCES ON DATABASE SCOPED CREDENTIAL::[DataLakeManagedIdentity] TO [elasticuser];

Once the objects are in place, we can now switch to the Azure SQL Database and create the necessary objects there.

Azure SQL Database Setup

Once the Serverless SQL Pools objects have been created, we can now create the necessary objects in the appropriate Azure SQL Database. In this scenario there is an Azure SQL Database with existing Dimension and Fact tables. We’ll create a master key, a database scoped credential that mirrors the login in Serverless SQL Pools, then a data source that references the Serverless SQL Pools endpoint.

--run on azure sql database if a master key doesn't exist

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong_password>';

--create a credential with the same details as the login created earlier

--in Serverless SQL Pools

CREATE DATABASE SCOPED CREDENTIAL ElasticDBQueryCredV2

WITH IDENTITY = 'elasticuser',

SECRET = '<strong_password>';

--now create a data source pointing to the Serverless SQL Pools endpoint and database

--use the credential created in the earlier step

CREATE EXTERNAL DATA SOURCE ExternalDataSourceDataLakeUKMIV2 WITH

(TYPE = RDBMS,

LOCATION = 'synapse-ondemand.sql.azuresynapse.net',

DATABASE_NAME = 'SQLElasticQuery',

CREDENTIAL = ElasticDBQueryCredV2

);

Once the security mechanism is in place, we can create an External Table with the same schema as the table in the Serverless SQL Pools database.

--create schema to hold tables

CREATE SCHEMA LDW AUTHORIZATION dbo;

GO

--create external table referencing the data source created earlier

CREATE EXTERNAL TABLE LDW.WebTelemetryDelta

(

UserID varchar(20),

EventType varchar(100),

ProductID varchar(100),

URL varchar(100),

Device varchar(50),

SessionViewSeconds int,

MessageKey varchar(100),

EventYear int,

EventMonth int,

EventDate date

)

WITH (

DATA_SOURCE = ExternalDataSourceDataLakeUKMIV2

);

GO

Now that all the required objects are in place in both the Serverless SQL Pools database and the Azure SQL Database, we can start querying.

Querying

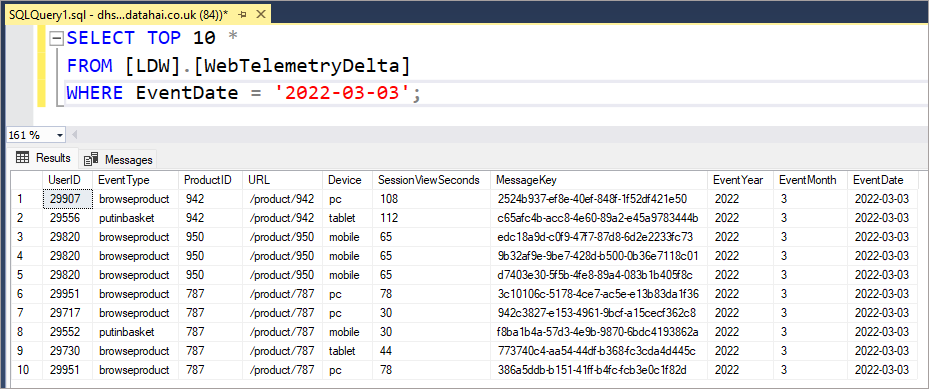

We can run a SELECT statement on the External Table within the Azure SQL Database and observer the results, we’ll also check the Monitor > SQL Requests area to see the SQL requests sent to the Serverless SQL Pools service. The following SQL queries were run using SSMS connected to the Azure SQL Database using an Active Directory login.

--show 10 rows of the source data SELECT TOP 10 * FROM [LDW].[WebTelemetryDelta] WHERE EventDate = '2022-03-03';

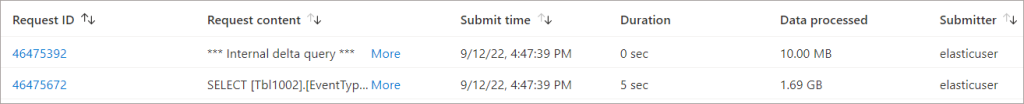

Let’s run an aggregate query and then check the monitoring area in Synapse Studio.

--aggregate the web telemetry data using eventtype and device SELECT EventType, Device, SUM(CAST(SessionViewSeconds AS BIGINT)) AS TotalSessionViewSeconds, COUNT(*) AS TotalEventCount FROM LDW.WebTelemetryDelta GROUP BY EventType, Device;

The SQL generated in the Request content field shows that the aggregate query was sent to Serverless SQL Pools successfully.

--SQL generated by running the elastic query from Azure SQL Database SELECT [Tbl1002].[EventType] [Col1036], [Tbl1002].[Device] [Col1037], SUM(CONVERT(bigint,[Tbl1002].[SessionViewSeconds],0)) [Expr1003], COUNT(*) [Expr1004] FROM [LDW].[WebTelemetryDelta] [Tbl1002] GROUP BY [Tbl1002].[EventType], [Tbl1002].[Device]

If we JOIN an internal and external table in a GROUP BY query, we’ll see that the query sent to Serverless SQL Pools is requesting each row. This is hugely inefficient as the query is not using the Serverless SQL Pools engine other than to pull the data row-by-row…the Azure SQL Database will then need to perform the GROUP BY operation!

--run aggregate query SELECT DP.EnglishProductCategoryName AS ProductCategory, COUNT(WT.MessageKey) AS TotalEventCount FROM DW.vwProductHierarchy DP INNER JOIN LDW.WebTelemetryDelta WT ON DP.ProductKey + 400 = WT.ProductID GROUP BY DP.EnglishProductCategoryName

As we can see, the SQL query sent to Serverless SQL Pools is requesting each row of data.

--generated SQL

SELECT

[Tbl1007].[MessageKey] [Col1018],

CONVERT(int,[Tbl1007].[ProductID],0) [Expr1012]

FROM [LDW].[WebTelemetryDelta] [Tbl1007]

What we can do is SELECT the data from the external table and then JOIN to the internal table, using a CTE or Temp table. In this example we’ll use a CTE to hold the results from the Serverless SQL Pools database.

--aggregate product category and name ;WITH webtelemetry AS ( SELECT ProductID, COUNT(*) AS TotalEventCount FROM LDW.WebTelemetryDelta GROUP BY ProductID ) SELECT DP.EnglishProductCategoryName AS ProductCategory, DP.EnglishProductName AS ProductName, SUM(WT.TotalEventCount) AS TotalEventCount FROM DW.vwProductHierarchy DP INNER JOIN webtelemetry WT ON DP.ProductKey + 400 = WT.ProductID GROUP BY DP.EnglishProductCategoryName, DP.EnglishProductName --aggregate product category ;WITH webtelemetry AS ( SELECT ProductID, COUNT(*) AS TotalEventCount FROM LDW.WebTelemetryDelta GROUP BY ProductID ) SELECT DP.EnglishProductCategoryName AS ProductCategory, SUM(WT.TotalEventCount) AS TotalEventCount FROM DW.vwProductHierarchy DP INNER JOIN webtelemetry WT ON DP.ProductKey + 400 = WT.ProductID GROUP BY DP.EnglishProductCategoryName

If we look at the SQL generated and run on the Serverless SQL Pools database, we can see an aggregate query being run, which is the desired result.

--SQL generated and run on Serverless SQL Pools database

SELECT

[Expr1009],

CONVERT(int,[Col1032],0) [Expr1015]

FROM (

SELECT [Tbl1008].[ProductID] [Col1032],

COUNT(*) [Expr1009]

FROM [LDW].[WebTelemetryDelta] [Tbl1008]

GROUP BY [Tbl1008].[ProductID]

) Qry1033

Using Elastic Query to load Azure SQL Database

We also have the option of using the elastic query link to INSERT INTO…SELECT data from the Serverless SQL Pools External Table into an internal table in the Azure SQL Database. Perhaps aggregated web telemetry data could be useful if sat alongside the already imported Dimensions and Facts.

Conclusion

In this blog post we’ve looked at how to setup an elastic query between an Azure SQL Database and a Serverless SQL Pools database to allow querying of data in an Azure Data Lake Gen2 account. This gives us an option when looking at using Azure SQL Database to hold data internally and also use the Serverless SQL Pools engine to process data in a Data Lake.

References

- Elastic query overview – Azure SQL Database | Microsoft Docs

- Get started with cross-database queries – Azure SQL Database | Microsoft Docs

- Use external tables with Synapse SQL – Azure Synapse Analytics | Microsoft Docs

- Getting Started with Azure Synapse Analytics SQL Serverless – Serverless SQL

- Query Delta Lake format using serverless SQL pool – Azure Synapse Analytics | Microsoft Docs